Linux Network Namespace

Linux namespace concepts

Starting with version 2.4.19, the Linux kernel has gradually introduced namespaces. The purpose of each namespace is to wrap a particular global system resource in an abstraction that makes it appear to the processes within the namespace that they have their own isolated instance of the global resource. The table below lists six namespace types in the order they were introduced (later kernels also added cgroup namespaces in Linux 4.6 and time namespaces in Linux 5.6):

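| Namespace | Introduced in | Isolated global resource | Isolation effect for containers |
|---|---|---|---|
| Mount namespaces | Linux 2.4.19 | Filesystem mount points | Each container can see a different filesystem hierarchy |
| UTS namespaces | Linux 2.6.19 | nodename and domainname | Each container can have its own hostname and domainname |
| IPC namespaces | Linux 2.6.19 | Certain interprocess communication resources: System V IPC and POSIX message queues | Each container has its own System V IPC objects and POSIX message queue filesystem, so only processes in the same IPC namespace can communicate through them |
| PID namespaces | Linux 2.6.24 | Process ID number space | Processes in each PID namespace have their own independent PIDs. Each container can have its own root process with PID 1. Containers can also be migrated between hosts, because PIDs inside the namespace no longer depend on the host. As a result, every process in a container has two PIDs: one inside the container and one on the host. |
| Network namespaces | Started in Linux 2.6.24, largely completed in Linux 2.6.29 | Network-related system resources | Each container has its own network devices, IP addresses, IP routing tables, /proc/net directory, port numbers, and so on. This lets the same application in several containers on one host each bind to port 80 inside its own container. |
| User namespaces | Started in Linux 2.6.23, completed in Linux 3.8 | User and group ID spaces | A process's user and group IDs inside a user namespace can differ from those on the host. Each container can have different user and group IDs, and an unprivileged user on the host can be a privileged user inside a user namespace. |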
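Each of these namespaces is visible from user space as an entry under /proc/<pid>/ns. The following is a minimal Linux-only sketch in Python 3 that lists the namespaces of the current process. The helper name `list_namespaces` is hypothetical, and on newer kernels the output will also include entries such as `cgroup` and `time` that are not in the table.

```python
import os


def list_namespaces(pid="self"):
    """Return {namespace type: link target} for a process (Linux only).

    Every entry in /proc/<pid>/ns is a symbolic link whose target has the
    form 'type:[inode]', for example 'net:[4026531992]'. Processes that are
    in the same namespace show the same target.
    """
    ns_dir = f"/proc/{pid}/ns"
    namespaces = {}
    for entry in sorted(os.listdir(ns_dir)):
        try:
            namespaces[entry] = os.readlink(os.path.join(ns_dir, entry))
        except OSError:
            # Skip entries we may not read (e.g. another user's process).
            continue
    return namespaces


if __name__ == "__main__" and os.path.isdir("/proc/self/ns"):
    for ns_type, target in list_namespaces().items():
        print(f"{ns_type:<20} {target}")
```

Running it inside a container and on the host should show different `net:[...]` targets. That difference is the isolation the table describes.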

Linux Network Namespace

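Network namespaces are a key building block of network virtualization. They let you create multiple isolated network spaces, each with its own network stack. Whether it is a virtual machine or a container, it runs as though it were on its own independent network.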

# Create container test1 from the busybox image
[root@localhost ~]# docker run -d --name test1 busybox /bin/sh -c "while true; do sleep 3600; done"
# Look at the network namespace of container test1
[root@localhost ~]# docker exec -it test1 /bin/sh
/ # ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
2: ip_vti0@NONE: <NOARP> mtu 1480 qdisc noop qlen 1000
    link/ipip 0.0.0.0 brd 0.0.0.0
149: eth0@if150: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
    link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0
       valid_lft forever preferred_lft forever

# Create container test2 and look at its network namespace
[root@localhost ~]# docker run -d --name test2 busybox /bin/sh -c "while true; do sleep 3600; done"
0e3046ec1d7ea5845db3cd03cdc683bb03ebf897e4d55d30599f06d26f89a365
[root@localhost ~]# docker exec test2 ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
2: ip_vti0@NONE: <NOARP> mtu 1480 qdisc noop qlen 1000
    link/ipip 0.0.0.0 brd 0.0.0.0
151: eth0@if152: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
    link/ether 02:42:ac:11:00:04 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.4/16 brd 172.17.255.255 scope global eth0
       valid_lft forever preferred_lft forever

# Look at the host's network namespace
[root@localhost ~]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eno16777736: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 00:0c:29:3d:e4:f6 brd ff:ff:ff:ff:ff:ff
    inet 192.168.31.210/24 brd 192.168.31.255 scope global noprefixroute eno16777736
       valid_lft forever preferred_lft forever
    inet6 fe80::20c:29ff:fe3d:e4f6/64 scope link noprefixroute
       valid_lft forever preferred_lft forever
3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:26:09:df:f1 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
    inet6 fe80::42:26ff:fe09:dff1/64 scope link
       valid_lft forever preferred_lft forever
4: ip_vti0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000
    link/ipip 0.0.0.0 brd 0.0.0.0
146: veth7e65841@if145: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
    link/ether 8a:bd:28:39:22:b8 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet6 fe80::88bd:28ff:fe39:22b8/64 scope link
       valid_lft forever preferred_lft forever
150: veth8840821@if149: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
    link/ether 36:f2:e2:24:7b:29 brd ff:ff:ff:ff:ff:ff link-netnsid 1
    inet6 fe80::34f2:e2ff:fe24:7b29/64 scope link
       valid_lft forever preferred_lft forever

# Enter container test1: it can ping the address on test2's eth0 interface
[root@localhost ~]# docker exec -it test1 /bin/sh
/ # ping 172.17.0.4
PING 172.17.0.4 (172.17.0.4): 56 data bytes
64 bytes from 172.17.0.4: seq=0 ttl=64 time=0.151 ms
64 bytes from 172.17.0.4: seq=1 ttl=64 time=0.109 ms

Managing Linux network namespaces with ip netns

# List network namespaces
[root@localhost ~]# ip netns list

# Delete a network namespace
[root@localhost ~]# ip netns delete test1

# Create network namespaces
[root@localhost ~]# ip netns add test1
[root@localhost ~]# ip netns add test2

# Show the details of a given network namespace: a new namespace has only a loopback interface, and it is DOWN
[root@localhost ~]# ip netns exec test1 ip a
1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
2: ip_vti0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000
    link/ipip 0.0.0.0 brd 0.0.0.0

# Show the link-layer interfaces of a given namespace
[root@localhost ~]# ip netns exec test1 ip link
1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN mode DEFAULT group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
2: ip_vti0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN mode DEFAULT group default qlen 1000
    link/ipip 0.0.0.0 brd 0.0.0.0
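`ip netns add NAME` keeps a named namespace alive by bind-mounting it onto the file /var/run/netns/NAME, and `ip netns list` essentially enumerates that directory. The following minimal Python 3 sketch reads the same directory. The helper name `list_named_netns` is hypothetical.

```python
import os


def list_named_netns(netns_dir="/var/run/netns"):
    """Return the names of network namespaces created with `ip netns add`.

    Each named namespace is a bind-mounted file in netns_dir (on most
    distributions /var/run is a symlink to /run).
    """
    try:
        return sorted(os.listdir(netns_dir))
    except FileNotFoundError:
        # No named namespace has been created yet, so the directory is absent.
        return []


if __name__ == "__main__":
    print(list_named_netns())
```

This also explains why Docker containers do not show up in `ip netns list` by default: Docker does not create entries in /var/run/netns for them.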

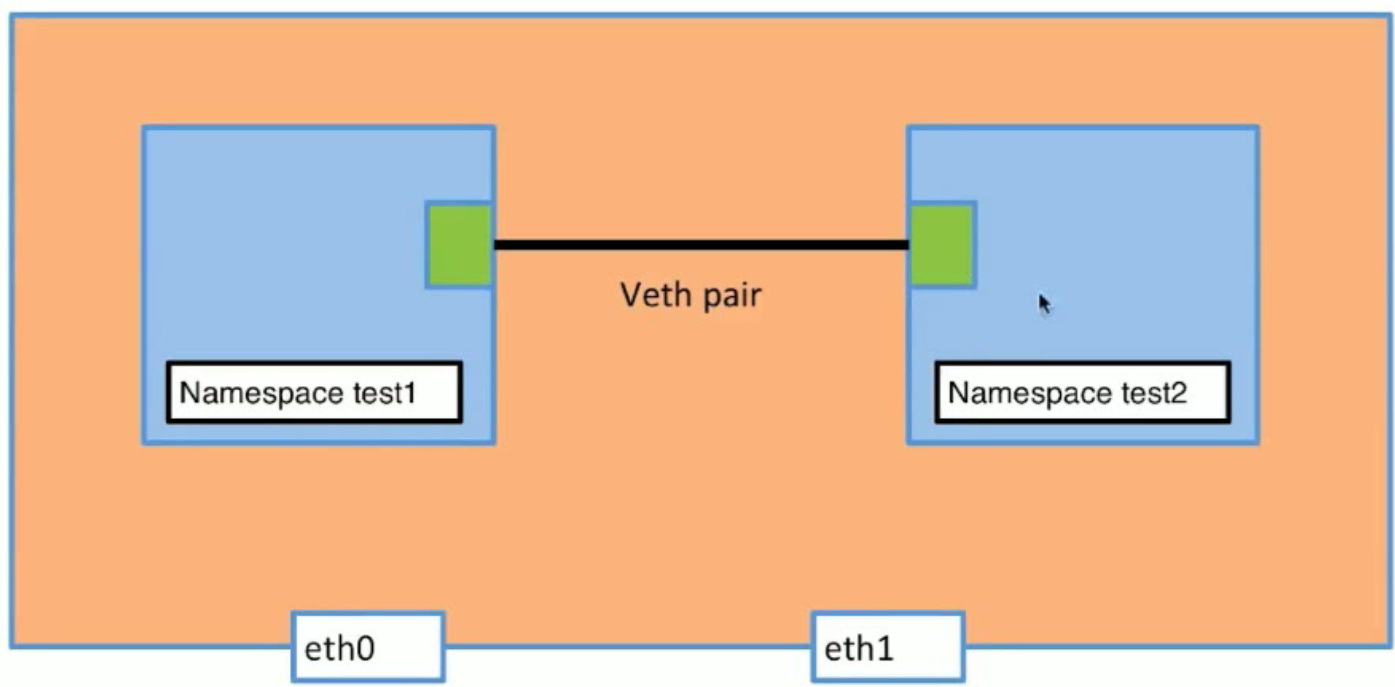

Connecting network namespaces: veth pairs

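As the name suggests, a veth pair is a pair of virtual Ethernet interfaces. Unlike tap/tun devices, veth devices always come in pairs. Each end is attached to a network stack, and the two ends are connected to each other, so a packet sent into one end comes out of the other.

Because of this property, a veth pair often serves as a bridge between virtual network devices. Typical examples are connecting two namespaces, connecting Linux bridges and OVS, and connecting Docker containers. Combining these links produces very complex virtual network topologies, such as OpenStack Neutron.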

# Create a veth pair whose two ends are veth-test1 and veth-test2
[root@localhost ~]# ip link add veth-test1 type veth peer name veth-test2

# Both ends of the new pair have a MAC address but no IP address, and both are DOWN
[root@localhost ~]# ip link | grep veth-
153: veth-test2@veth-test1: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000
154: veth-test1@veth-test2: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

# Move veth-test1 into network namespace test1
[root@localhost ~]# ip link set veth-test1 netns test1
# veth-test1 now appears in network namespace test1
[root@localhost ~]# ip netns exec test1 ip link
1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN mode DEFAULT group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
2: ip_vti0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN mode DEFAULT group default qlen 1000
    link/ipip 0.0.0.0 brd 0.0.0.0
154: veth-test1@if153: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000
    link/ether a2:09:2b:e9:d0:75 brd ff:ff:ff:ff:ff:ff link-netnsid 0
# List the host's interfaces: veth-test1 is no longer in the host namespace
[root@localhost ~]# ip link | grep veth-
153: veth-test2@if154: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

# Likewise, move veth-test2 into network namespace test2
[root@localhost ~]# ip link set veth-test2 netns test2
# veth-test2 has disappeared from the host as well
[root@localhost ~]# ip link | grep veth-

# Assign an address to the veth interface in each namespace
[root@localhost ~]# ip netns exec test1 ip addr add 192.168.1.11/24 dev veth-test1
[root@localhost ~]# ip netns exec test2 ip addr add 192.168.1.12/24 dev veth-test2

# Bring up the veth interface in each namespace
[root@localhost ~]# ip netns exec test1 ip link set dev veth-test1 up
[root@localhost ~]# ip netns exec test2 ip link set dev veth-test2 up

# Check the state of the veth interface in each namespace
[root@localhost ~]# ip netns exec test1 ip a | grep veth
154: veth-test1@if153: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    inet 192.168.1.11/24 scope global veth-test1
[root@localhost ~]# ip netns exec test2 ip a | grep veth
153: veth-test2@if154: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    inet 192.168.1.12/24 scope global veth-test2

# From namespace test1, ping the veth interface in test2
[root@localhost ~]# ip netns exec test1 ping 192.168.1.12
PING 192.168.1.12 (192.168.1.12) 56(84) bytes of data.
64 bytes from 192.168.1.12: icmp_seq=1 ttl=64 time=0.133 ms
64 bytes from 192.168.1.12: icmp_seq=2 ttl=64 time=0.062 ms

# From namespace test2, ping the veth interface in test1
[root@localhost ~]# ip netns exec test2 ping 192.168.1.11
PING 192.168.1.11 (192.168.1.11) 56(84) bytes of data.
64 bytes from 192.168.1.11: icmp_seq=1 ttl=64 time=0.096 ms
64 bytes from 192.168.1.11: icmp_seq=2 ttl=64 time=0.065 ms
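The steps above follow a fixed recipe. The minimal Python 3 sketch below collects exactly those `ip` commands for any two namespaces. The helper names `veth_link_commands` and `run` are hypothetical. By default the commands are only printed, and actually executing them requires root.

```python
import subprocess


def veth_link_commands(ns_a, ns_b, if_a, if_b, addr_a, addr_b):
    """Return the `ip` commands (as argv lists) that join two namespaces with a veth pair."""
    return [
        ["ip", "netns", "add", ns_a],
        ["ip", "netns", "add", ns_b],
        # One veth pair: if_a and if_b are its two ends.
        ["ip", "link", "add", if_a, "type", "veth", "peer", "name", if_b],
        # Move each end into its own namespace.
        ["ip", "link", "set", if_a, "netns", ns_a],
        ["ip", "link", "set", if_b, "netns", ns_b],
        # Give each end an address in the same subnet.
        ["ip", "netns", "exec", ns_a, "ip", "addr", "add", addr_a, "dev", if_a],
        ["ip", "netns", "exec", ns_b, "ip", "addr", "add", addr_b, "dev", if_b],
        # Bring both ends up.
        ["ip", "netns", "exec", ns_a, "ip", "link", "set", "dev", if_a, "up"],
        ["ip", "netns", "exec", ns_b, "ip", "link", "set", "dev", if_b, "up"],
    ]


def run(commands, dry_run=True):
    """Print the commands, or execute them when dry_run is False (needs root)."""
    for argv in commands:
        if dry_run:
            print(" ".join(argv))
        else:
            subprocess.run(argv, check=True)


if __name__ == "__main__":
    run(veth_link_commands("test1", "test2", "veth-test1", "veth-test2",
                           "192.168.1.11/24", "192.168.1.12/24"))
```

Keeping the commands as data makes it easy to review them before running them as root.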

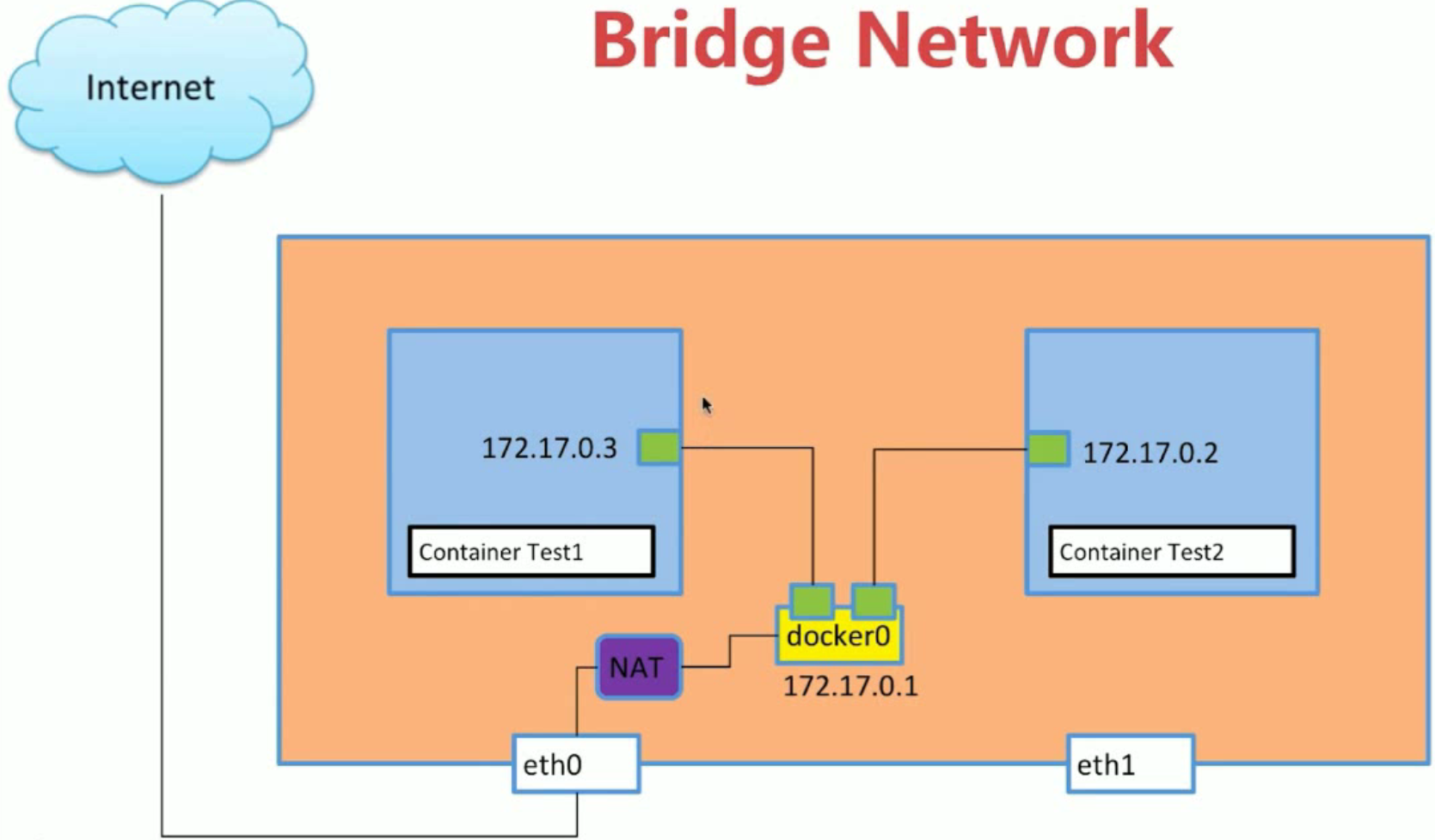

Bridge

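How containers communicate with each other

Each container is connected to the host's docker0 bridge through a veth pair. One end is the container's eth0, and the other end is a vethXXX interface on the host that is attached to docker0. Because all these containers hang off the same bridge, they can reach each other through it.

How containers reach the Internet

A container reaches the Internet through the host. Packets go from the container to docker0, and the host then forwards them out through its physical network interface. On the way out, the host uses NAT to replace the container's private source address with the host's own address.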
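Both paragraphs come down to one routing decision inside the container. This minimal Python 3 sketch assumes the default Docker setup shown in this article: a container address in 172.17.0.0/16 and a default route via docker0's address, 172.17.0.1. The hypothetical helper `next_hop` decides whether a destination is reached directly across the bridge or handed to the gateway, where the host forwards and NATs it.

```python
import ipaddress


def next_hop(container_cidr, destination, gateway):
    """Return "direct" if destination is in the container's own subnet, else the gateway.

    Same subnet: the packet goes straight across the bridge to the peer.
    Otherwise: it goes to the default gateway (the bridge's address), and the
    host forwards it out of its physical interface with NAT applied.
    """
    network = ipaddress.ip_interface(container_cidr).network
    if ipaddress.ip_address(destination) in network:
        return "direct"
    return gateway


if __name__ == "__main__":
    print(next_hop("172.17.0.3/16", "172.17.0.4", "172.17.0.1"))  # another container
    print(next_hop("172.17.0.3/16", "8.8.8.8", "172.17.0.1"))     # an Internet host
```

The real kernel decision uses the full routing table (`ip route` inside the container), which in this setup contains just these two routes.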

# List Docker networks
[root@localhost ~]# docker network ls
NETWORK ID     NAME      DRIVER    SCOPE
8322f6f7f661   bridge    bridge    local
15bcd4ce7873   host      host      local
14172bae898c   none      null      local

# Inspect the bridge network: container test1 uses the bridge network by default
[root@localhost ~]# docker network inspect bridge
"Containers": {
    "0ee36a7343bee417894cc3b0dea527e28d6d66679736902c29c90a7aee7f6ddb": {
        "Name": "test1",
        "EndpointID": "4044e0d6f348b36bc1ad6428eeb7ea66b1c693530f9a22334572fa4f0d039c59",
        "MacAddress": "02:42:ac:11:00:03",
        "IPv4Address": "172.17.0.3/16",
        "IPv6Address": ""
    }
}

# test1's eth0 (ifindex 149, shown as eth0@if150) and the host's veth8840821
# (ifindex 150, shown as veth8840821@if149) are the two ends of one veth pair.
# The pair connects test1's network namespace with the host namespace, where docker0 lives,
# and on the host veth8840821 is attached to docker0 ("master docker0").
[root@localhost ~]# ip a    # show the host's network configuration
3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:26:09:df:f1 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
    inet6 fe80::42:26ff:fe09:dff1/64 scope link
       valid_lft forever preferred_lft forever
150: veth8840821@if149: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
    link/ether 36:f2:e2:24:7b:29 brd ff:ff:ff:ff:ff:ff link-netnsid 1
    inet6 fe80::34f2:e2ff:fe24:7b29/64 scope link
       valid_lft forever preferred_lft forever
[root@localhost ~]# docker exec test1 ip a    # show the network configuration inside test1
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
2: ip_vti0@NONE: <NOARP> mtu 1480 qdisc noop qlen 1000
    link/ipip 0.0.0.0 brd 0.0.0.0
149: eth0@if150: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
    link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0
       valid_lft forever preferred_lft forever

# From test1, ping the host's docker0
[root@localhost ~]# docker exec test1 ping 172.17.0.1
PING 172.17.0.1 (172.17.0.1): 56 data bytes
64 bytes from 172.17.0.1: seq=0 ttl=64 time=0.127 ms
64 bytes from 172.17.0.1: seq=1 ttl=64 time=0.107 ms

# Verify that the host-side interface 150 is attached to docker0
[root@localhost ~]# yum install bridge-utils
[root@localhost ~]# ip a | grep 150:
150: veth8840821@if149: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
[root@localhost ~]# brctl show
bridge name     bridge id               STP enabled     interfaces
docker0         8000.02422609dff1       no              veth7e65841
                                                        veth8840821
                                                        vetha7006c1
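Pairing a container's eth0 with its host-side veth by reading `@ifN` is easy to automate. In the `NAME@ifN` form printed by `ip link`/`ip a`, N is the peer's interface index. The minimal Python 3 sketch below (hypothetical helpers `parse_links` and `find_host_peer`) matches the two outputs. Interface indexes are unique only within one namespace, so the match is meaningful only between one container's output and the host's.

```python
import re

# Start of an `ip link` / `ip a` line such as
# "150: veth8840821@if149: <BROADCAST,...>" -> index 150, name veth8840821, peer 149.
LINK_RE = re.compile(r"^(\d+):\s+([^@:\s]+)@if(\d+):")


def parse_links(text):
    """Map ifindex -> (name, peer ifindex) for interfaces shown as NAME@ifN."""
    links = {}
    for line in text.splitlines():
        match = LINK_RE.match(line.strip())
        if match:
            links[int(match.group(1))] = (match.group(2), int(match.group(3)))
    return links


def find_host_peer(container_output, host_output, container_if="eth0"):
    """Return the host-side interface paired with container_if, or None."""
    container = parse_links(container_output)
    host = parse_links(host_output)
    for index, (name, peer) in container.items():
        if name == container_if:
            host_name, host_peer = host.get(peer, (None, None))
            # Both ends must point at each other.
            if host_peer == index:
                return host_name
    return None
```

Fed the `docker exec test1 ip a` and host `ip a` outputs above, it should return `veth8840821`, matching the `brctl show` result.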

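Linking containers (--link)

- If no network is specified when a container is created, it is attached to the default bridge network.
- If a container is created with --link to another container, the new container can reach the linked container by its name.
- A container can also be attached to a user-defined bridge network. Containers on the same user-defined (non-default) bridge can reach each other by container name.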
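With the legacy `--link` option, Docker writes an entry for the linked container into the /etc/hosts file of the container being created (check with `docker exec test2 cat /etc/hosts`). That is why, in the following example, only test2 can resolve test1 by name. User-defined bridges instead resolve names through Docker's embedded DNS server. The minimal Python 3 sketch below mimics the /etc/hosts lookup. The helper `resolve_from_hosts` and the sample hosts text in its test are hypothetical illustrations, not captured output.

```python
def resolve_from_hosts(hosts_text, name):
    """Return the first IP address listed for `name` in /etc/hosts-style text, or None."""
    for raw_line in hosts_text.splitlines():
        # Drop comments and surrounding whitespace.
        line = raw_line.split("#", 1)[0].strip()
        if not line:
            continue
        address, *hostnames = line.split()
        if name in hostnames:
            return address
    return None
```

Because the entry exists only in test2's file, the lookup succeeds in one direction and fails in the other.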

# Create container test2 with --link test1
# (the test2 created earlier must be removed first, e.g. with docker rm -f test2, because container names must be unique)
[root@localhost ~]# docker run -d --name test2 --link test1 busybox /bin/sh -c "while true; do sleep 3600; done"

# Check test2's IP address: 172.17.0.4/16
[root@localhost ~]# docker exec test2 ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
2: ip_vti0@NONE: <NOARP> mtu 1480 qdisc noop qlen 1000
    link/ipip 0.0.0.0 brd 0.0.0.0
155: eth0@if156: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
    link/ether 02:42:ac:11:00:04 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.4/16 brd 172.17.255.255 scope global eth0
       valid_lft forever preferred_lft forever

# test2 can ping test1 by IP address
[root@localhost ~]# docker exec test2 ping 172.17.0.3
PING 172.17.0.3 (172.17.0.3): 56 data bytes
64 bytes from 172.17.0.3: seq=0 ttl=64 time=0.347 ms

# Because test2 was created with --link, it can also ping test1 by container name.
# A link works much like adding a name record: test2 reaches test1 by name without knowing its IP address.
[root@localhost ~]# docker exec test2 ping test1
PING test1 (172.17.0.3): 56 data bytes
64 bytes from 172.17.0.3: seq=0 ttl=64 time=0.084 ms

# test1 can ping test2 by IP address
[root@localhost ~]# docker exec test1 ping 172.17.0.4
PING 172.17.0.4 (172.17.0.4): 56 data bytes
64 bytes from 172.17.0.4: seq=0 ttl=64 time=0.215 ms

# But test1 cannot ping test2 by name: the link only works in one direction
[root@localhost ~]# docker exec test1 ping test2
ping: bad address 'test2'

# Create a user-defined bridge network
[root@localhost ~]# docker network create -d bridge my-bridge
0eb1d64f0e7d39a1605cecbde3aa37f9ed64fd00f1b8205880e046e50e34b234
[root@localhost ~]# docker network ls
NETWORK ID     NAME        DRIVER    SCOPE
8322f6f7f661   bridge      bridge    local
15bcd4ce7873   host        host      local
0eb1d64f0e7d   my-bridge   bridge    local
14172bae898c   none        null      local

# List all local bridges: br-0eb1d64f0e7d is the user-defined bridge network just created (named after its network ID)
[root@localhost ~]# brctl show
bridge name        bridge id            STP enabled   interfaces
br-0eb1d64f0e7d    8000.0242e925741a    no
docker0            8000.02422609dff1    no            veth57ea7d0
                                                      veth7e65841
                                                      veth8840821

# Create container test3 attached to the user-defined bridge
[root@localhost ~]# docker run -d --name test3 --network my-bridge busybox /bin/sh -c "while true; do sleep 3600; done"

# List the bridges again: the new bridge now has an interface attached
[root@localhost ~]# brctl show
bridge name        bridge id            STP enabled   interfaces
br-0eb1d64f0e7d    8000.0242e925741a    no            veth1cfd21f
docker0            8000.02422609dff1    no            veth57ea7d0
                                                      veth7e65841
                                                      veth8840821

# Check the new interface
[root@localhost ~]# ip a | grep veth1cfd21f
159: veth1cfd21f@if158: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-0eb1d64f0e7d state UP group default
# Check the Containers list of my-bridge: test3 has joined it, and its IP address is now in 172.18.0.0/16 instead of 172.17.0.0/16
[root@localhost ~]# docker network inspect 0eb1d64f0e7d | grep test
                "Name": "test3",
                "IPv4Address": "172.18.0.2/16"

# Attach an existing container to the given bridge network (a container can be attached to several bridge networks at the same time)
[root@localhost ~]# docker network connect my-bridge test2
[root@localhost ~]# docker network inspect 0eb1d64f0e7d | grep test
                "Name": "test3", "IPv4Address": "172.18.0.2/16",
                "Name": "test2", "IPv4Address": "172.18.0.3/16",
# test3 pings test2: test2 has now joined my-bridge too, so test3 can ping it by container name as well as by IP address
[root@localhost ~]# docker exec test3 ping test2
PING test2 (172.18.0.3): 56 data bytes
64 bytes from 172.18.0.3: seq=0 ttl=64 time=0.123 ms
[root@localhost ~]# docker exec test3 ping 172.18.0.3
PING 172.18.0.3 (172.18.0.3): 56 data bytes
64 bytes from 172.18.0.3: seq=0 ttl=64 time=0.088 ms

# test2 is now connected both to the default docker0 bridge (eth0) and to my-bridge (eth1)
[root@localhost ~]# docker exec test2 ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
2: ip_vti0@NONE: <NOARP> mtu 1480 qdisc noop qlen 1000
    link/ipip 0.0.0.0 brd 0.0.0.0
155: eth0@if156: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
    link/ether 02:42:ac:11:00:04 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.4/16 brd 172.17.255.255 scope global eth0
       valid_lft forever preferred_lft forever
160: eth1@if161: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
    link/ether 02:42:ac:12:00:03 brd ff:ff:ff:ff:ff:ff
    inet 172.18.0.3/16 brd 172.18.255.255 scope global eth1
       valid_lft forever preferred_lft forever

# Suppose test2 had been created without --link test1. Being on the default docker0 bridge alone
# would not let test2 reach test1 by name, because the default bridge does not support name resolution.
# Once test1 is also connected to my-bridge, test2 can reach it by name, and the traffic uses the 172.18.0.0/16 network.
[root@localhost ~]# docker network connect my-bridge test1
[root@localhost ~]# docker exec test2 ping test1
PING test1 (172.18.0.4): 56 data bytes
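Grepping `docker network inspect` output for container names, as done above, is fragile. `docker network inspect NETWORK` prints a JSON array, and each network's `Containers` object maps container IDs to endpoints carrying `Name` and `IPv4Address`. The minimal Python 3 sketch below (hypothetical helper `container_addresses`) turns that JSON into a name-to-address map.

```python
import json


def container_addresses(inspect_output):
    """Map container name -> IPv4 address (without prefix length) from inspect JSON."""
    addresses = {}
    for network in json.loads(inspect_output):
        # "Containers" may be empty (or null) for a network without containers.
        for endpoint in (network.get("Containers") or {}).values():
            addresses[endpoint["Name"]] = endpoint["IPv4Address"].split("/")[0]
    return addresses
```

For example, pass it the captured output of `docker network inspect my-bridge` (e.g. read via `subprocess.run(..., capture_output=True, text=True).stdout`).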

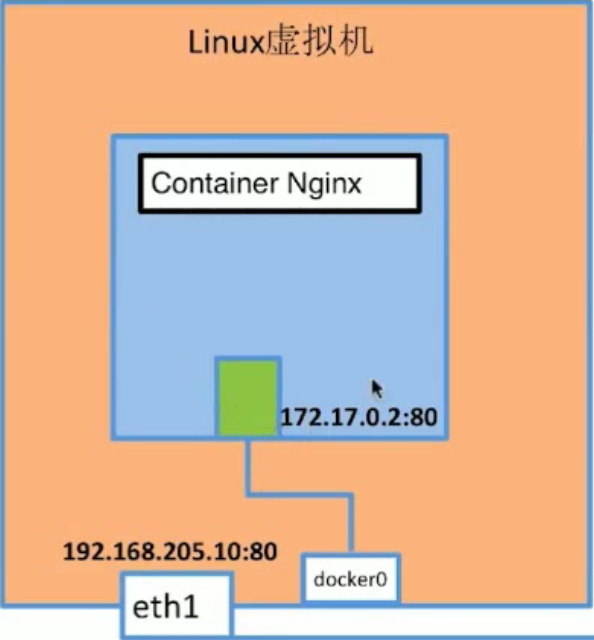

Container port mapping

# Start a container from the nginx image
[root@localhost ~]# docker run --name web -d nginx

# Inspect the bridge network and find the container named web among the Containers to get its IP address
[root@localhost ~]# docker network inspect bridge
            "4f6d5934695246edc343a348bc40f434a44f7db5de1eeefb77643574fffdd9d0": {
                "Name": "web",
                "EndpointID": "3d663a4e558db41a77e7978aa0d5d42a93445e917a9e5c94ca3786242e17484d",
                "MacAddress": "02:42:ac:11:00:05",
                "IPv4Address": "172.17.0.5/16",
                "IPv6Address": ""
            },

# ping works
[root@localhost ~]# ping 172.17.0.5
PING 172.17.0.5 (172.17.0.5) 56(84) bytes of data.
64 bytes from 172.17.0.5: icmp_seq=1 ttl=64 time=0.160 ms

# curl works too
[root@localhost ~]# curl http://172.17.0.5
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>

# Expose the container's service on the host's network: map host port 81 to container port 80
# (the earlier web container must be removed first, e.g. with docker rm -f web, because container names must be unique)
[root@localhost ~]# docker run --name web -d -p 81:80 nginx
[root@localhost ~]# curl http://127.0.0.1:81
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
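With `-p 81:80`, Docker forwards traffic arriving on the host's port 81 to port 80 in the container (on Linux this is normally implemented with iptables NAT rules). The value of `-p` packs several fields together. The minimal Python 3 sketch below splits the common forms. The helper `parse_publish` is hypothetical and deliberately simplified: it does not handle port ranges or IPv6 addresses, which Docker also accepts.

```python
def parse_publish(spec):
    """Split a simplified `docker run -p` value into its parts.

    Handles containerPort, hostPort:containerPort and ip:hostPort:containerPort,
    each optionally followed by /tcp or /udp.
    Returns (host_ip, host_port, container_port, protocol). host_ip "" means all
    interfaces, and host_port None means Docker picks a free port.
    """
    ports, _, protocol = spec.partition("/")
    protocol = protocol or "tcp"
    parts = ports.split(":")
    if len(parts) == 1:
        host_ip, host_port, container_port = "", "", parts[0]
    elif len(parts) == 2:
        host_ip = ""
        host_port, container_port = parts
    elif len(parts) == 3:
        host_ip, host_port, container_port = parts
    else:
        raise ValueError(f"unsupported -p value: {spec!r}")
    return host_ip, (int(host_port) if host_port else None), int(container_port), protocol
```

For the example above, `parse_publish("81:80")` yields host port 81, container port 80, TCP, on all host interfaces.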

None Network

- Suitable for cases that need only local access: apart from loopback, the container gets no network interface.

# Create container test4 attached to the none network
[root@localhost flask-hello-world]# docker run -d --name test4 --network none busybox /bin/sh -c "while true; do sleep 3600; done"
# Inspect the none network: test4 has no IP address and no MAC address
[root@localhost flask-hello-world]# docker network inspect none
[
    {
        "Name": "none",
        "Id": "14172bae898c2b81ba4926303e839f71128b021b277127910bfb974b4d4fcba4",
        "Created": "2019-03-24T00:11:14.751327041+08:00",
        "Scope": "local",
        "Driver": "null",
        "EnableIPv6": false,
        "IPAM": {
            "Driver": "default",
            "Options": null,
            "Config": []
        },
        "Internal": false,
        "Attachable": false,
        "Ingress": false,
        "ConfigFrom": {
            "Network": ""
        },
        "ConfigOnly": false,
        "Containers": {
            "793d4d074babf6fea972261ed774caf08e8541cee7c376c41e2084f9ea4ff4c1": {
                "Name": "test4",
                "EndpointID": "c650d6269560784dbb03215f4a88e6416b722f91f16ee2bd55dc8aebafda1178",
                "MacAddress": "",
                "IPv4Address": "",
                "IPv6Address": ""
            }
        },
        "Options": {},
        "Labels": {}
    }
]

# Inside test4 there is only the loopback interface (ip_vti0 is the unused tunnel device that appears in every namespace on this host)
[root@localhost flask-hello-world]# docker exec test4 ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
2: ip_vti0@NONE: <NOARP> mtu 1480 qdisc noop qlen 1000
    link/ipip 0.0.0.0 brd 0.0.0.0
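A program can check which interfaces its network namespace offers without calling `ip`. The minimal Python 3 sketch below uses the standard `socket.if_nameindex()`. The helper name `interface_names` is hypothetical, and busybox has no Python, so it would have to run in an image that ships Python. On the none network, one would expect it to list essentially the same names as `ip a` above.

```python
import socket


def interface_names():
    """Return the names of the network interfaces in the caller's network namespace."""
    return [name for _index, name in socket.if_nameindex()]


if __name__ == "__main__":
    print(interface_names())
```
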

Host Network

- A container created with --network host shares the host's network namespace.

# Create container test5 using the host network
[root@localhost flask-hello-world]# docker run -d --name test5 --network host busybox /bin/sh -c "while true; do sleep 3600; done"

# test5 sees the same interfaces as the host, which shows that a container created on the host network shares the host's network namespace
[root@localhost flask-hello-world]# docker exec test5 ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eno16777736: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast qlen 1000
    link/ether 00:0c:29:3d:e4:f6 brd ff:ff:ff:ff:ff:ff
    inet 192.168.31.210/24 brd 192.168.31.255 scope global eno16777736
       valid_lft forever preferred_lft forever
    inet6 fe80::20c:29ff:fe3d:e4f6/64 scope link
       valid_lft forever preferred_lft forever
3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue
    link/ether 02:42:26:09:df:f1 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
    inet6 fe80::42:26ff:fe09:dff1/64 scope link
       valid_lft forever preferred_lft forever
4: ip_vti0@NONE: <NOARP> mtu 1480 qdisc noop qlen 1000
    link/ipip 0.0.0.0 brd 0.0.0.0
146: veth7e65841@if145: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue master docker0
    link/ether 8a:bd:28:39:22:b8 brd ff:ff:ff:ff:ff:ff
    inet6 fe80::88bd:28ff:fe39:22b8/64 scope link
       valid_lft forever preferred_lft forever
150: veth8840821@if149: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue master docker0
    link/ether 36:f2:e2:24:7b:29 brd ff:ff:ff:ff:ff:ff
    inet6 fe80::34f2:e2ff:fe24:7b29/64 scope link
       valid_lft forever preferred_lft forever
156: veth57ea7d0@if155: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue master docker0
    link/ether fe:91:6a:13:af:6b brd ff:ff:ff:ff:ff:ff
    inet6 fe80::fc91:6aff:fe13:af6b/64 scope link
       valid_lft forever preferred_lft forever
157: br-0eb1d64f0e7d: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue
    link/ether 02:42:e9:25:74:1a brd ff:ff:ff:ff:ff:ff
    inet 172.18.0.1/16 brd 172.18.255.255 scope global br-0eb1d64f0e7d
       valid_lft forever preferred_lft forever
    inet6 fe80::42:e9ff:fe25:741a/64 scope link
       valid_lft forever preferred_lft forever
159: veth1cfd21f@if158: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue master br-0eb1d64f0e7d
    link/ether a6:85:53:8c:dd:66 brd ff:ff:ff:ff:ff:ff
    inet6 fe80::a485:53ff:fe8c:dd66/64 scope link
       valid_lft forever preferred_lft forever
161: vethe58898c@if160: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue master br-0eb1d64f0e7d
    link/ether 92:f8:fe:f4:0d:a7 brd ff:ff:ff:ff:ff:ff
    inet6 fe80::90f8:feff:fef4:da7/64 scope link
       valid_lft forever preferred_lft forever
165: veth8c891c7@if164: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue master docker0
    link/ether da:2f:65:64:34:ff brd ff:ff:ff:ff:ff:ff
    inet6 fe80::d82f:65ff:fe64:34ff/64 scope link
       valid_lft forever preferred_lft forever
169: veth4b84431@if168: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue master docker0
    link/ether 46:51:31:3f:14:42 brd ff:ff:ff:ff:ff:ff
    inet6 fe80::4451:31ff:fe3f:1442/64 scope link
       valid_lft forever preferred_lft forever
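Comparing `ip a` listings by eye only suggests that test5 shares the host's namespace. A direct check compares the device and inode numbers of /proc/<pid>/ns/net, which are equal exactly when two processes share a network namespace. The minimal Python 3 sketch below uses the hypothetical helper `same_netns`. Inspecting a container's process normally requires root. The container's PID can be obtained with `docker inspect -f '{{.State.Pid}}' test5`.

```python
import os


def same_netns(pid_a, pid_b):
    """True if two processes (PIDs or "self") share a network namespace (Linux only)."""
    a = os.stat(f"/proc/{pid_a}/ns/net")
    b = os.stat(f"/proc/{pid_b}/ns/net")
    return (a.st_dev, a.st_ino) == (b.st_dev, b.st_ino)
```

Run as root with test5's PID and PID 1 (the host's init), this should return True. With test1's PID it should return False.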

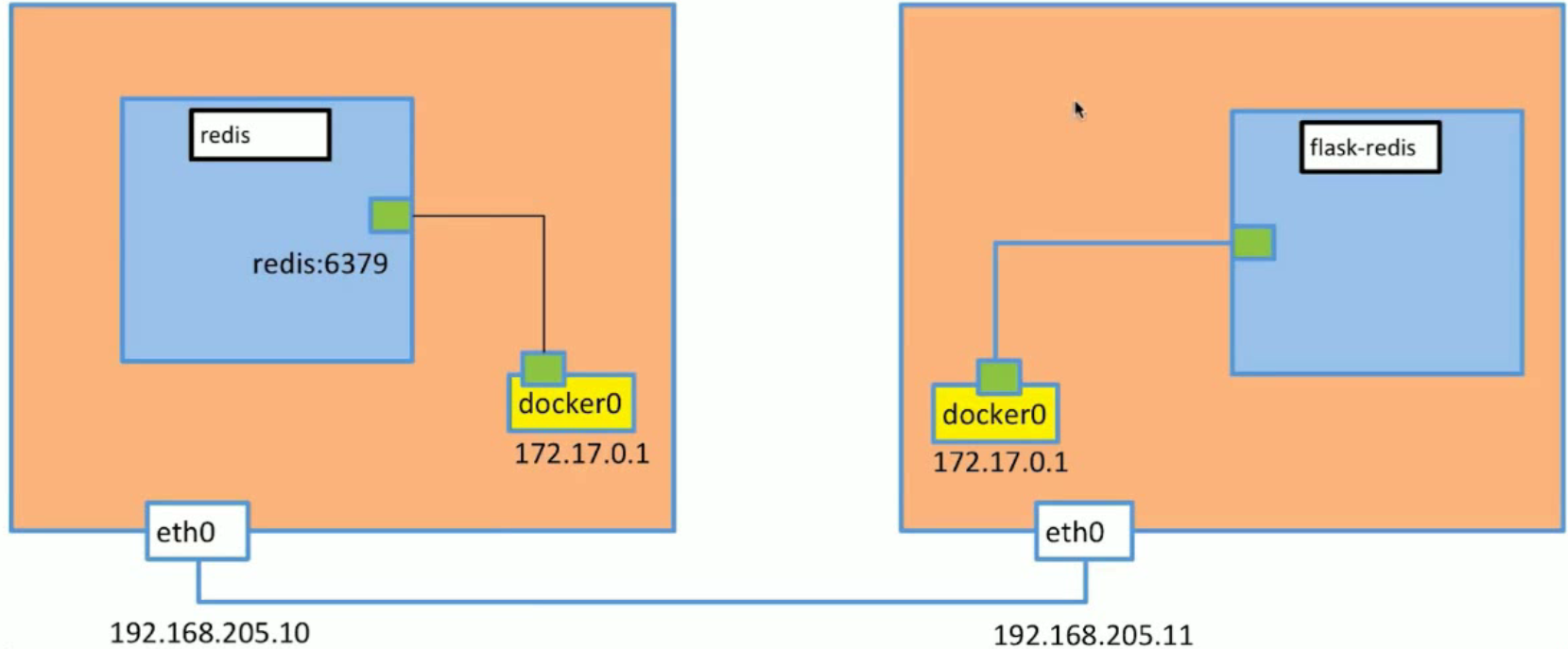

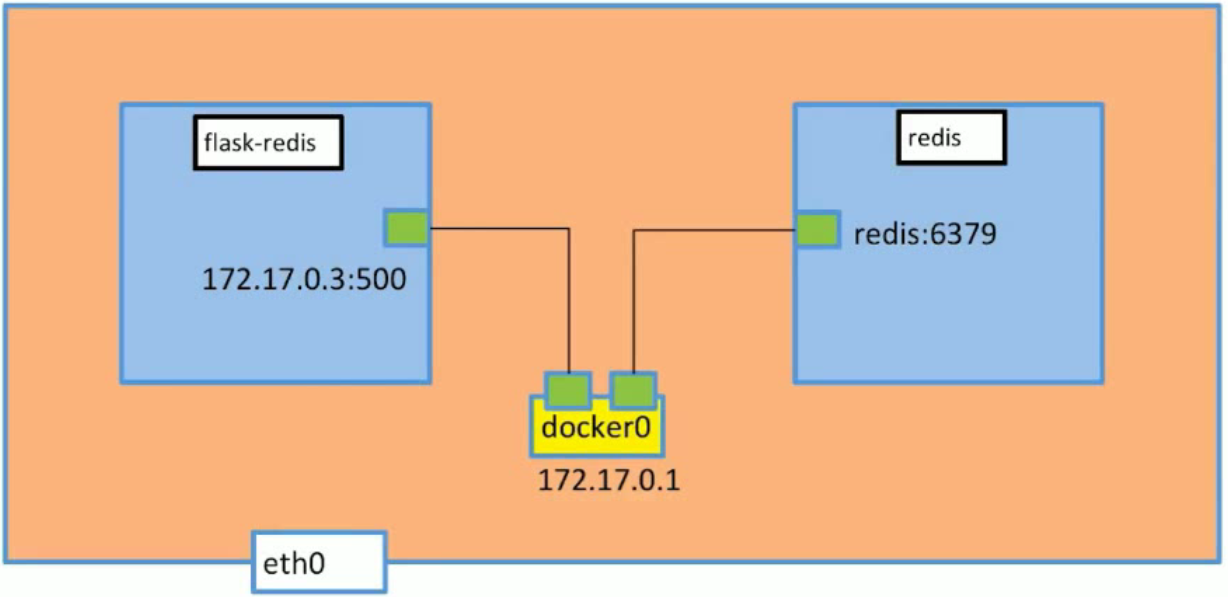

Deploying a multi-container application: passing parameters

# Dockerfile
[root@localhost flask-redis]# cat Dockerfile
FROM python:2.7
LABEL maintainer="xxx"
COPY . /app
WORKDIR /app
RUN pip install flask redis -i http://pypi.douban.com/simple --trusted-host pypi.douban.com
EXPOSE 5000
CMD [ "python", "app.py" ]

# app.py
[root@localhost flask-redis]# cat app.py
from flask import Flask
from redis import Redis
import os
import socket

app = Flask(__name__)

# The Redis host comes from the REDIS_HOST environment variable (default: 127.0.0.1)
redis = Redis(host=os.environ.get('REDIS_HOST', '127.0.0.1'), port=6379)

@app.route('/')
def hello():
    # Increment a counter stored in Redis and report it with this container's hostname
    redis.incr('hits')
    return 'Hello Container World! I have been seen %s times and my hostname is %s.\n' % (redis.get('hits'),socket.gethostname())

if __name__ == "__main__":
    # Listen on all interfaces so the app is reachable through the published port 5000
    app.run(host="0.0.0.0", port=5000)

# Build the flask-redis image
[root@localhost flask-redis]# docker build -t rtsfan1024/flask-redis .

# Start the redis container
[root@localhost flask-redis]# docker run -d --name redis_one redis

# Start the flask-redis container, linking it to redis_one and passing the container name redis_one as the value of REDIS_HOST
[root@localhost flask-redis]# docker run -d -p 5000:5000 --link redis_one --name flask-redis -e REDIS_HOST=redis_one rtsfan1024/flask-redis

# Test from the host: flask-redis reaches the redis container, which shows that the two containers can communicate
[root@localhost flask-redis]# curl 192.168.31.220:5000
Hello Container World! I have been seen 1 times and my hostname is e1103ff7eec1.

# Open a shell in flask-redis and check the value of REDIS_HOST
[root@localhost flask-redis]# docker exec -it flask-redis /bin/bash
root@e1103ff7eec1:/app# env | grep REDIS_HOST
REDIS_HOST=redis_one
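To confirm from a script that the Flask container really stores its counter in redis_one, you can request the page twice and check that the count goes up. The minimal Python 3 sketch below does this. The helper names `parse_hits` and `fetch_hits` are hypothetical, and the regular expression assumes the exact reply format of the app.py shown above.

```python
import re
from urllib.request import urlopen

# Tail of the app's reply, e.g. "... I have been seen 1 times and my hostname is e1103ff7eec1."
REPLY_RE = re.compile(r"seen (\d+) times and my hostname is (\S+?)\.?$")


def parse_hits(body):
    """Return (hit count, hostname) from the flask-redis reply text."""
    match = REPLY_RE.search(body.strip())
    if match is None:
        raise ValueError(f"unexpected reply: {body!r}")
    return int(match.group(1)), match.group(2)


def fetch_hits(url):
    """Request the app once and parse its reply (the containers must be running)."""
    with urlopen(url, timeout=5) as response:
        return parse_hits(response.read().decode("utf-8"))
```

For example, two successive `fetch_hits("http://192.168.31.220:5000")` calls should return counts that differ by one while reporting the same hostname.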

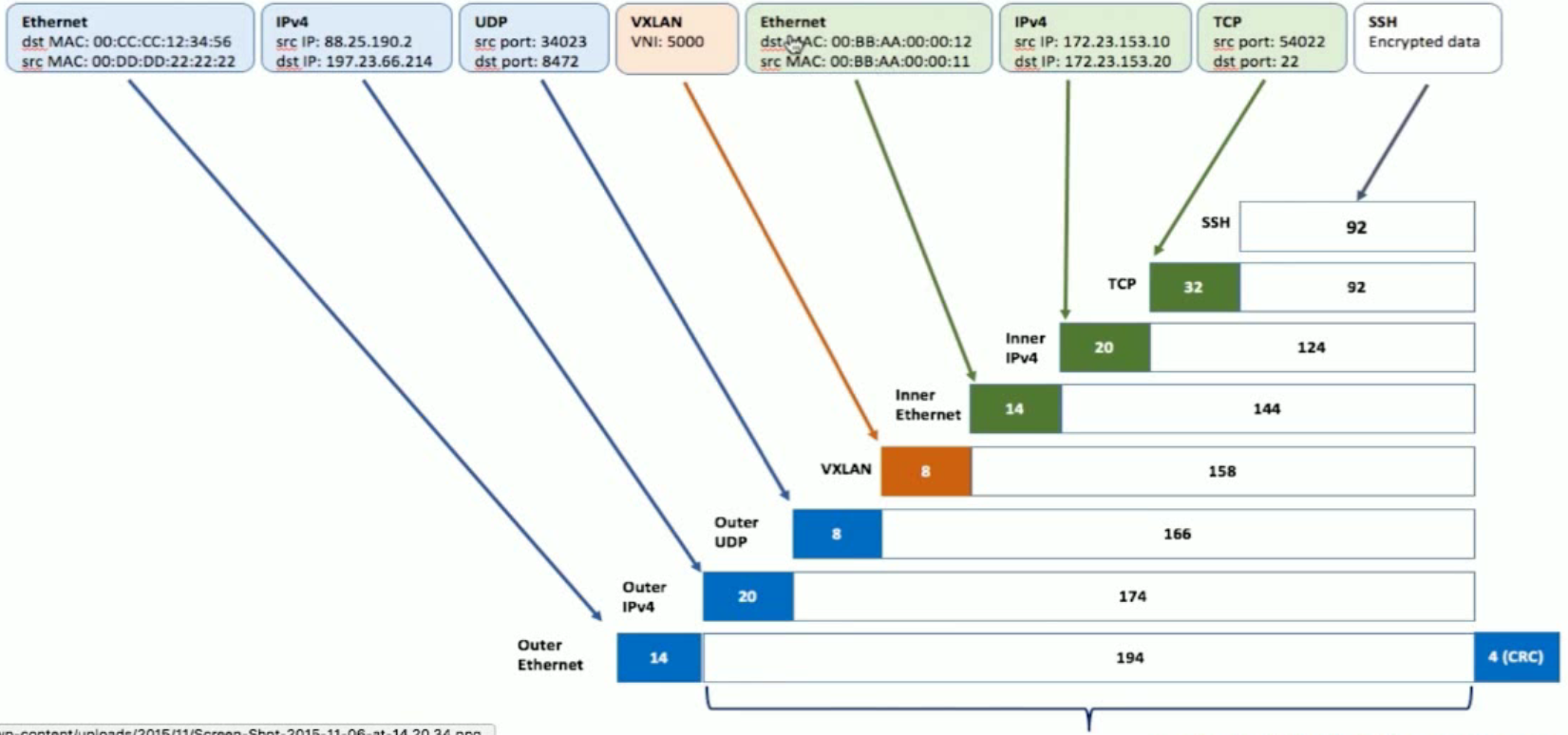

Multi-host communication: overlay